Data Portfolio

Welcome to my portfolio. Here you’ll find a selection of my data work. Explore my projects to learn more about what I do.

Please keep in mind that most of my work remains private due to confidentiality agreements with my clients.

n8n - Jazz ETL

This workflow is a Jazz OMS ETL (Extract, Transform, Load) pipeline that runs on weekdays at 7 AM to extract order, shipment, and inventory data from the Jazz OMS API and load it into Google Cloud Storage (GCS).

Workflow Overview

- Trigger: Schedule (7 AM, Monday-Friday)

Main Process Flow:

1) Authentication - Obtains an API token from Jazz OMS

2) Date Range Generation - Creates a 14-day lookback window (current date - 14 days to current date - 1 day). This can be modified as needed.

3) Three Parallel Data Extraction Branches:

Branch A: Order Status & Line Items

- Loops through each date in the range

- Fetches order status data via the API

- Extracts and deduplicates order status records

- Extracts and deduplicates order line items (detail_set)

- Transforms to NDJSON format

- Uploads to GCS: jazz/order_status/order_status__YYYYMMDD.jsonl and jazz/order_line_items/order_line_items__YYYYMMDD.jsonl

Branch B: Shipments

- Loops through each date in the range

- Fetches shipment data via the API

- Deduplicates by shipment_number

- Transforms to NDJSON format

- Uploads to GCS: jazz/shipments/shipments__YYYYMMDD.jsonl

Branch C: Inventory Levels

- Fetches current inventory levels (single snapshot, not date-based)

- Processes inventory records with facility and quantity details

- Transforms to NDJSON format

- Uploads to GCS: jazz/inventory/inventory.jsonl

4) File Management - Each upload includes:

- Delete existing file (if present)

- Create new file

- 2-second wait for consistency

- Merge to confirm completion

5) Notifications - Sends success messages to Slack after completion
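
The date-range step and the per-day file naming used in Branches A and B can be sketched in Python. This is a minimal illustration under stated assumptions, not the n8n node itself. `lookback_dates` and `gcs_object_name` are hypothetical names. The window is assumed to run from 14 days ago through yesterday, inclusive.

```python
from datetime import date, timedelta

# Assumed default; the workflow notes say the 14-day window can be changed as needed.
LOOKBACK_DAYS = 14


def lookback_dates(today: date, lookback_days: int = LOOKBACK_DAYS) -> list[date]:
    """Return every date from (today - lookback_days) through (today - 1), oldest first."""
    return [today - timedelta(days=offset) for offset in range(lookback_days, 0, -1)]


def gcs_object_name(dataset: str, day: date) -> str:
    """Build a per-day object name such as jazz/shipments/shipments__YYYYMMDD.jsonl."""
    return f"jazz/{dataset}/{dataset}__{day:%Y%m%d}.jsonl"


if __name__ == "__main__":
    for day in lookback_dates(date.today()):
        # Branches A and B each loop over these dates and write one file per day.
        print(gcs_object_name("shipments", day))
```

Writing one object per day means a rerun simply replaces that day's files. This fits the delete-then-create pattern described in the File Management step.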

Key Features:

- Pagination handling for large API responses

- Deduplication logic to prevent duplicate records

- Parallel processing of multiple date ranges

- Error handling with retry logic on GCS uploads

- Timestamps added to all records (__loaded_at)
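
Pagination, deduplication, and the `__loaded_at` timestamp can be illustrated with a short Python sketch. It assumes a hypothetical page fetcher that returns `(records, has_more)`, because the actual Jazz OMS pagination parameters are not shown here. It also keeps the first record seen for each key, which may differ from the workflow's own rule.

```python
import json
from datetime import datetime, timezone
from typing import Callable, Iterable, Iterator

# Hypothetical fetcher: takes a page number, returns (records, has_more).
FetchPage = Callable[[int], tuple[list[dict], bool]]


def fetch_all(fetch_page: FetchPage) -> Iterator[dict]:
    """Request page after page until the API reports that no pages remain."""
    page = 1
    while True:
        records, has_more = fetch_page(page)
        yield from records
        if not has_more:
            return
        page += 1


def dedupe(records: Iterable[dict], key: str) -> list[dict]:
    """Keep only the first record seen for each value of `key`."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


def to_ndjson(records: Iterable[dict], loaded_at: datetime) -> str:
    """Serialize one JSON object per line, stamping each with __loaded_at."""
    stamp = loaded_at.isoformat()
    return "".join(json.dumps({**r, "__loaded_at": stamp}) + "\n" for r in records)


if __name__ == "__main__":
    # Fake two-page response with a shipment duplicated across pages.
    pages = {
        1: ([{"shipment_number": "S1"}, {"shipment_number": "S2"}], True),
        2: ([{"shipment_number": "S2"}, {"shipment_number": "S3"}], False),
    }
    rows = dedupe(fetch_all(lambda p: pages[p]), key="shipment_number")
    print(to_ndjson(rows, datetime.now(timezone.utc)), end="")
```

With `key="shipment_number"` this mirrors Branch B. Stamping every record with the same `loaded_at` value makes it easy to tell which run loaded a given row.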

n8n - Northbeam ETL

This workflow is a Northbeam data ETL pipeline that exports marketing analytics data to Google Cloud Storage on a daily schedule, with optional backfill capability.

Workflow Flow

Schedule Trigger - Runs daily at 7:00 AM (cron: 0 7 * * *)

Backfill Enabled? (Set node) - Configuration control that determines:

Daily mode (backfill: false): Exports yesterday's data only

Backfill mode (backfill: true): Exports the date range from start_date to end_date

BACKFILL (Code node) - Generates date ranges:

In daily mode: Creates a single date entry for yesterday

In backfill mode: Creates entries for each day in the specified range

Outputs formatted dates for API calls and file naming

POST DATA EXPORT (HTTP Request) - Calls the Northbeam API to export:

Metrics: AOV, CAC, CPM, CTR, impressions, ROAS, spend, visits, transactions, attributed revenue, email signups, and custom metrics

Attribution: Northbeam custom model, 1-day window, accrual accounting

Granularity: Daily data at ad level

Destination: GCS bucket fd-data-storage with path northbeam/ad_daily_clicks_only_accrual_1window/

Sends requests in batches of 1 with a 2-second interval between batches, a 20-second timeout, and retry logic

Slack - ETL Success Message - Sends a success notification to a Slack webhook on completion
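
The BACKFILL Code node's date logic can be approximated in Python. This sketch makes two assumptions: `end_date` is inclusive, and the node emits one ISO date for the API call and one `YYYYMMDD` string for file naming. The `export_dates` name and the output keys are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional


def export_dates(today: date, backfill: bool = False,
                 start_date: Optional[date] = None,
                 end_date: Optional[date] = None) -> list[dict]:
    """Return one entry for yesterday (daily mode) or one per day from start_date to end_date."""
    if not backfill:
        days = [today - timedelta(days=1)]
    else:
        if start_date is None or end_date is None or start_date > end_date:
            raise ValueError("backfill mode needs start_date <= end_date")
        span = (end_date - start_date).days
        # end_date is treated as inclusive (an assumption).
        days = [start_date + timedelta(days=i) for i in range(span + 1)]
    # Hypothetical output keys: an ISO date for the API call, a compact one for file names.
    return [{"api_date": d.isoformat(), "file_date": d.strftime("%Y%m%d")} for d in days]


if __name__ == "__main__":
    print(export_dates(date.today()))
    print(export_dates(date.today(), backfill=True,
                       start_date=date(2024, 2, 28), end_date=date(2024, 3, 1)))
```

Both modes return the same list shape, so the downstream export step does not need to know which mode produced it.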

Key Features

Flexible execution: Toggle between daily incremental loads and historical backfills

Comprehensive metrics: Tracks 20+ marketing and revenue metrics

Error handling: Retry logic with 2s wait between attempts

Monitoring: Slack notifications for successful runs
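
In n8n, batching and retries are node settings rather than hand-written code. The logic is roughly the following. `with_retries` and `run_in_batches` are hypothetical helpers, and the limit of 3 attempts is an assumption. The 20-second timeout would be passed to whatever HTTP client makes the call, for example `urllib.request.urlopen(request, timeout=20)`.

```python
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def with_retries(call: Callable[[], T], max_tries: int = 3, wait_seconds: float = 2.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(); on any exception wait wait_seconds and retry, up to max_tries attempts."""
    if max_tries < 1:
        raise ValueError("max_tries must be at least 1")
    attempt = 1
    while True:
        try:
            return call()
        except Exception:
            if attempt >= max_tries:
                raise  # give up and surface the last error
            sleep(wait_seconds)
            attempt += 1


def run_in_batches(items: Sequence[T], handle: Callable[[T], R], batch_size: int = 1,
                   interval_seconds: float = 2.0,
                   sleep: Callable[[float], None] = time.sleep) -> list[R]:
    """Process items batch_size at a time, pausing interval_seconds between batches."""
    results: list[R] = []
    for start in range(0, len(items), batch_size):
        if start:
            sleep(interval_seconds)  # no pause before the first batch
        for item in items[start:start + batch_size]:
            results.append(handle(item))
    return results
```

The `sleep` parameter is injectable, so the helpers can be exercised without real delays. For example, passing `waits.append` records each pause instead of sleeping.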

Looker Dashboards & LookML Models

I have been using Looker and LookML for visualization for the past 6 years. I have developed over 100 dashboards and Looks, built entire LookML models from scratch, and helped companies organize their content in both Looker and LookML.

My experience in both analytics and data engineering also allows me to bring more robust, technical concepts into Looker. I strongly believe Looker should not be used only by data analysts: data engineers play a key role in improving the tool's performance and reducing its costs.

Please note that due to client confidentiality agreements, the repository and specific Looker content for this project remain private.

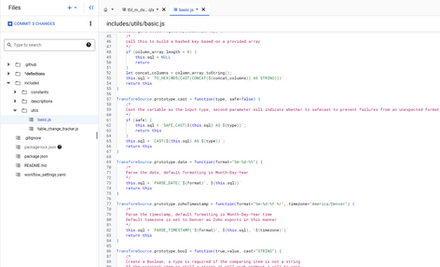

Dataform Model with Slack Alert Integration

I helped migrate from BigQuery to Dataform to gain more control and flexibility over data tables. My contributions included creating validations, tables, SCD2 (Slowly Changing Dimension Type 2) functions, and reusable functions that let project-wide changes be made by editing only the function itself. I also implemented assertions, tags, and other features to improve data governance and workflow efficiency. In addition, I supported the Slack integration that sends real-time alerts for table assertions and validations, enabling proactive monitoring and issue resolution.
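
Dataform models are written in SQLX. The Python below is therefore only a conceptual sketch of the SCD2 versioning idea, not the functions from this project. The `Version` record and `apply_scd2` are hypothetical. Keys missing from a new snapshot are assumed to stay open, although some SCD2 designs close them instead.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Version:
    key: str                          # business key, e.g. a customer ID
    attrs: tuple                      # tracked attribute values
    valid_from: date
    valid_to: Optional[date] = None   # None marks the current version


def apply_scd2(history: list[Version], snapshot: dict[str, tuple], as_of: date) -> list[Version]:
    """Close changed versions and open new ones, keeping the full history."""
    current = {v.key: v for v in history if v.valid_to is None}
    result = [v for v in history if v.valid_to is not None]  # already-closed history
    for key, version in current.items():
        new_attrs = snapshot.get(key)
        if new_attrs is None or new_attrs == version.attrs:
            result.append(version)  # unchanged, or absent from snapshot: stays open (assumption)
        else:
            result.append(replace(version, valid_to=as_of))  # close the old version
            result.append(Version(key, new_attrs, as_of))    # open the new one
    for key, attrs in snapshot.items():
        if key not in current:
            result.append(Version(key, attrs, as_of))  # brand-new key
    return result


if __name__ == "__main__":
    h = apply_scd2([], {"c1": ("gold",)}, date(2024, 1, 1))
    h = apply_scd2(h, {"c1": ("platinum",)}, date(2024, 2, 1))
    for v in h:
        print(v)
```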

* Please note that due to client confidentiality agreements, the repository and specific Dataform content for this project remain private.

Employee KPIs Dashboard

Project completed for a U.S.-based client, specifically for the Human Resources department, aimed at analyzing employee-related data. The analysis focused on key metrics such as salaries, age ranges, gender distribution, employee evaluations, and employees by department, among others. This dashboard does not represent the final product delivered to the client but serves as a demo to illustrate the approach and insights provided.

Expenses VS Incomes

Work completed for a client who requested key performance indicators (KPIs) related to income and expenses, enabling comparisons against goals and budgets. The purpose was to provide insight into whether the allocated budget was sufficient to achieve the predetermined objectives. This dashboard does not represent the final product delivered to the client but serves as a demo for showcasing the approach and analysis performed.

GitHub Action for LookML Content Validator

For Looker users, this repository helps validate whether a new pull request will break content in production. The GitHub Action compares the content in development with the content in production. If the changes in a pull request break content, the action fails, and a label is required to merge.
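
The comparison at the heart of the action can be sketched as a set difference. Errors that appear in development but not in production are the ones the pull request introduced. The `ContentError` shape, the helper names, and the `content-break-approved` label are hypothetical. The real action gets its errors from Looker's Content Validator, and its exact rules may differ.

```python
from typing import Iterable, NamedTuple

# Hypothetical label name; the real action's label may differ.
OVERRIDE_LABEL = "content-break-approved"


class ContentError(NamedTuple):
    content_type: str  # e.g. "dashboard" or "look"
    content_id: str
    message: str


def introduced_errors(production: Iterable[ContentError],
                      development: Iterable[ContentError]) -> set[ContentError]:
    """Errors present in development but not in production were introduced by the PR."""
    return set(development) - set(production)


def check_passes(production: Iterable[ContentError], development: Iterable[ContentError],
                 pr_labels: Iterable[str]) -> bool:
    """Pass when the PR breaks nothing new, or when the override label is present."""
    return not introduced_errors(production, development) or OVERRIDE_LABEL in set(pr_labels)


if __name__ == "__main__":
    prod = [ContentError("dashboard", "12", "Unknown field")]
    dev = prod + [ContentError("look", "7", "Unknown view")]
    print(introduced_errors(prod, dev))
    print(check_passes(prod, dev, pr_labels=[]))
```

Comparing against production, rather than failing on any error, keeps pre-existing broken content from blocking unrelated pull requests. In CI, a step would exit non-zero whenever `check_passes` returns `False`.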
